Unveiling Clothoff.io: The Deepfake AI App's Controversial Rise

In the rapidly evolving digital landscape, artificial intelligence continues to push boundaries, producing tools that range from enormously beneficial to deeply problematic. Among the latter, one name has drawn particular controversy: clothoff.io. The platform openly invites users to "undress anyone using AI" and has ignited a fierce debate about the ethics, privacy, and legal implications of deepfake technology.

As the digital world grapples with the proliferation of AI-generated content, tools like clothoff.io sit at the center of a moral and legal dilemma. By promising to digitally remove clothing from images, the app raises critical questions about consent, the potential for misuse, and the lengths to which its creators go to remain anonymous. This article examines how clothoff.io works, its hidden origins, the serious ethical and legal challenges it presents, and the broader implications for digital safety and privacy.

Table of Contents

- What Exactly is Clothoff.io? Decoding the AI "Undress" App

- The Shroud of Anonymity: Who is Behind Clothoff.io?

- The Ethical Quagmire: Consent, Privacy, and Deepfake Pornography

- Legal Ramifications: Is Using Clothoff.io Illegal?

- Clothoff.io's Digital Footprint: Communities and Referrals

- The Developers' Perspective (or lack thereof): "Busy Bees" and Competition

- Protecting Yourself: Identifying and Combatting Deepfakes

- Conclusion

What Exactly is Clothoff.io? Decoding the AI "Undress" App

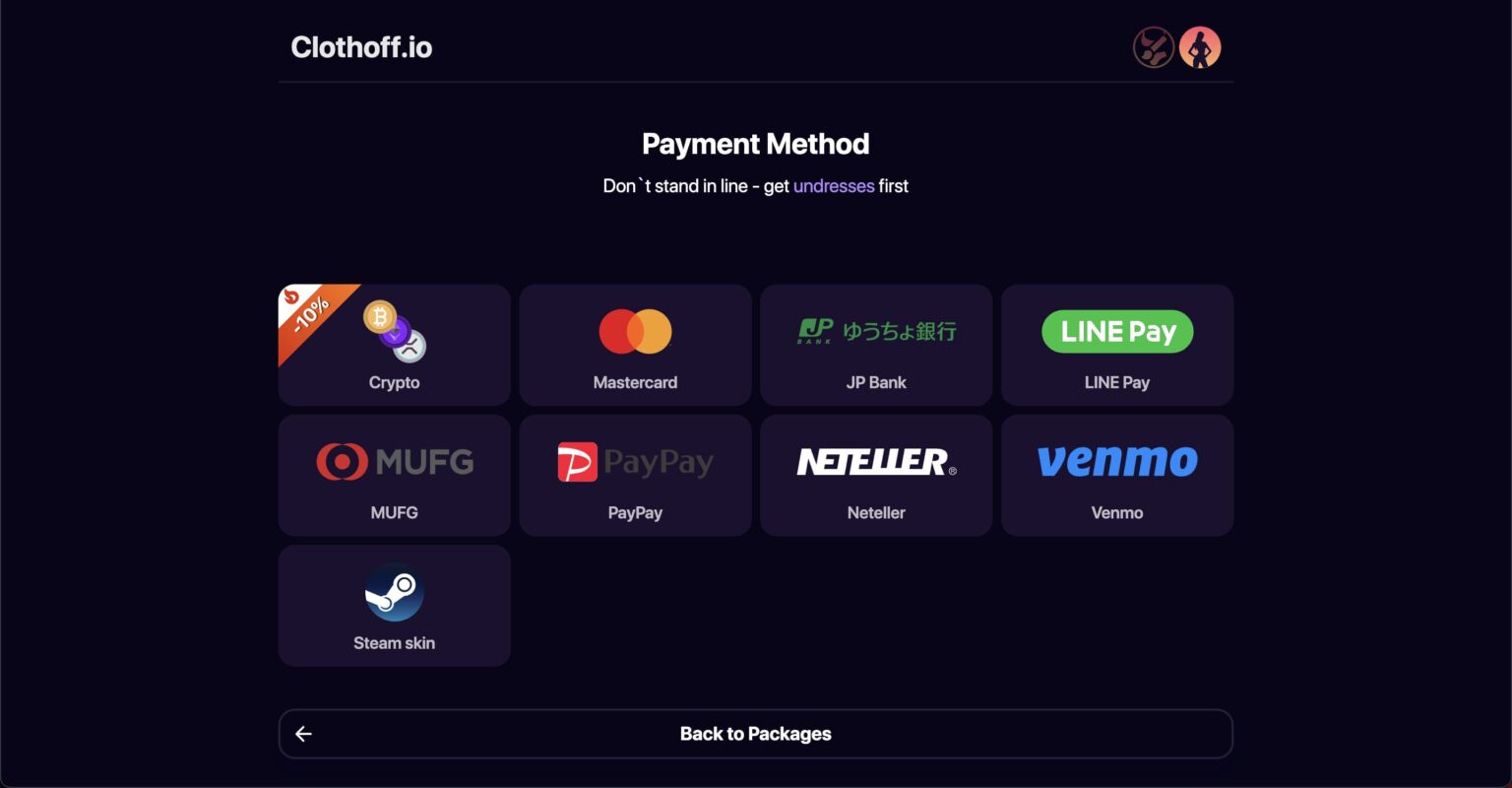

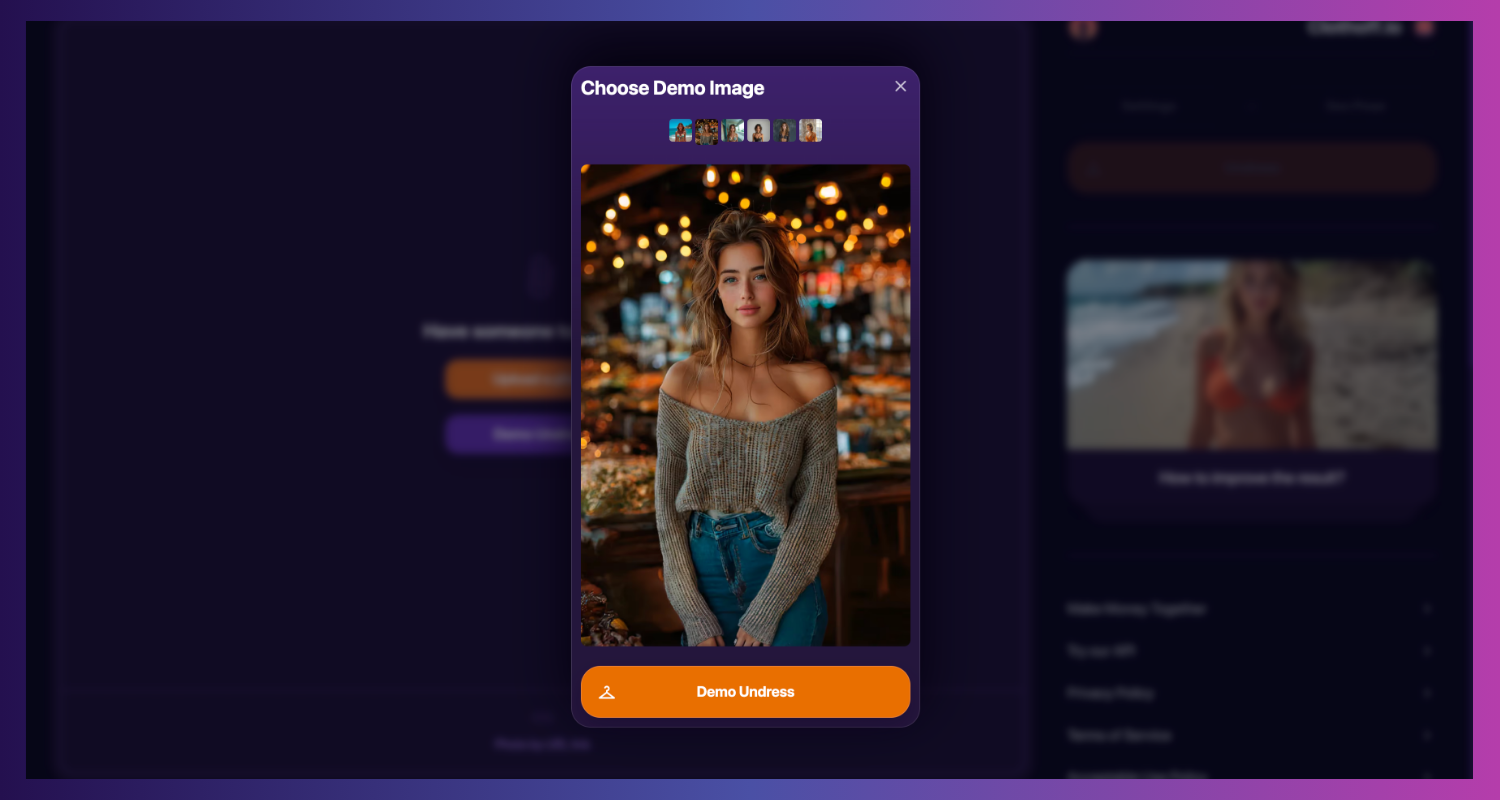

Clothoff.io presents itself as an artificial intelligence tool that digitally removes clothing from images. Its website, which reportedly receives more than 4 million visits a month, boldly invites users to “undress anyone using AI.” At its core, the application relies on deepfake technology, a branch of AI that can generate or manipulate visual and audio content with a high degree of realism. Deepfake techniques have legitimate and beneficial applications, for example in film production, education, and medical imaging, but their misuse, as exemplified by clothoff.io, is cause for serious alarm.

The app takes an uploaded image and runs it through its AI models to produce a new version in which the subject appears nude or partially nude. The result is often called a "nudity deepfake," and when the person depicted has not consented, it is a form of "non-consensual intimate imagery" (NCII). The ease of access and the explicit nature of its advertised function make clothoff.io a particularly concerning platform. Its popularity, measured in millions of monthly visits, reveals a troubling demand for such tools and highlights how difficult it is for society to control the spread of harmful AI applications. The promise of being able to "undress" someone with a few clicks, without their knowledge or consent, directly undermines personal privacy and dignity.

The Shroud of Anonymity: Who is Behind Clothoff.io?

One of the most striking aspects of clothoff.io is the deliberate anonymity of its creators. Investigations into the app's financial trails have revealed the lengths to which they have gone to disguise their identities. This is not a casual attempt at privacy; it suggests a calculated effort to evade scrutiny and potential legal repercussions for operating a platform with such controversial capabilities.

Specific transactions linked to the app have reportedly led to a London-registered company called Texture Oasis, which appears to be part of a wider network designed to obscure the true individuals or entities behind clothoff.io. Shell companies and layered corporate structures are a common tactic for those who operate in legal gray areas or want to distance themselves from potentially illicit activity. This lack of transparency makes it harder for law enforcement and regulators to hold the creators accountable, and it raises serious questions about the ethics guiding the development and deployment of such powerful and potentially harmful AI tools. The effort spent hiding their identities suggests the creators are well aware of how contentious their product is and prefer to operate outside the usual bounds of accountability.

The Ethical Quagmire: Consent, Privacy, and Deepfake Pornography

The very existence of clothoff.io plunges us into a profound ethical quagmire, particularly concerning consent and privacy. The name clothoff.io has become inextricably tied to the idea of a "deepfake pornography AI app," and that label alone signals a severe breach of fundamental human rights. Creating or distributing non-consensual intimate imagery, whether real or AI-generated, is a profound violation of a person's autonomy, dignity, and privacy. It is an act of digital sexual assault.

The core issue is the absence of consent. Using someone's image to generate explicit content without their permission is a gross violation. This technology lets malicious actors exploit and humiliate people, often women, by creating and circulating fake explicit content that can devastate their personal and professional lives. Because such content is so easy to create and share online, victims face immense difficulty getting it removed and restoring their reputations. The harm also extends beyond individuals to societal trust: as convincing deepfakes proliferate, it becomes harder to distinguish reality from fabrication, which weakens trust in visual evidence and can undermine democratic processes and public discourse.

The Human Cost of AI Misuse

The impact of deepfake pornography on victims is devastating. Imagine waking up to find digitally altered, explicit images of yourself circulating online, images that are completely fabricated but appear disturbingly real. The psychological toll can be immense, leading to severe anxiety, depression, social isolation, and even suicidal ideation. Victims often face public shaming, harassment, and professional repercussions, even when it is clear the images are fake. The reputational damage can be irreparable, affecting careers, relationships, and overall well-being. This is not merely a "digital prank"; it is a form of gender-based violence that leverages technology to inflict profound harm. The human cost of AI misuse, as exemplified by apps like clothoff.io, far outweighs any perceived "utility" or "entertainment" value, cementing its status as a tool for abuse rather than innovation.

Legal Ramifications: Is Using Clothoff.io Illegal?

The legal landscape surrounding deepfakes, particularly those used for non-consensual intimate imagery, is evolving rapidly but remains complex. Jurisdictions around the world are still working out how to classify and prosecute the creation and distribution of such content. Users who try apps like clothoff.io out of curiosity or a misguided belief that it is harmless often ask, "Will the developers report me and I face legal actions?" One user's lament, "I messed up by using AI art app to 'nudity' people," shows how regret and fear of legal consequences can set in once the gravity of their actions sinks in.

While the developers of such apps are unlikely to report their own users (doing so would expose their own operations), creating or distributing non-consensual deepfake pornography is increasingly being criminalized. In the United States, several states have enacted laws that specifically target deepfake pornography, making its dissemination, and in some cases its creation, illegal, with penalties that can include hefty fines and prison sentences. Countries in Europe, Asia, and Australia are likewise implementing or strengthening laws to address this emerging threat. These laws often focus on the lack of consent and the intent to harm or humiliate. Even if a user never distributes an image, merely creating it can be a prosecutable offense in some jurisdictions, especially if it depicts a minor. The legal risks of using clothoff.io are significant and should not be underestimated.

Navigating the Legal Landscape of Deepfakes

The legal battle against deepfakes is multifaceted. Prosecutors face challenges in identifying perpetrators, especially when they hide behind layers of anonymity and operate across international borders. However, as technology advances, so do forensic methods for tracing digital footprints. Lawmakers are continually refining legislation to keep pace with the rapid evolution of AI. For instance, some laws focus on the intent to deceive or harm, while others take a broader approach, criminalizing any non-consensual creation of intimate imagery, regardless of intent. The legal landscape is a patchwork, but the global trend is clear: there is a growing consensus that deepfake pornography is a serious crime. Individuals who engage with platforms like clothoff.io are not only participating in unethical behavior but are also putting themselves at significant legal risk, facing potential criminal charges, civil lawsuits from victims, and irreversible damage to their own reputations.

Clothoff.io's Digital Footprint: Communities and Referrals

Beyond its official website, clothoff.io, like many online services, has built a significant digital footprint across online communities, particularly on platforms like Telegram and Reddit. These communities serve as hubs where users discuss the app, share tips, and, disturbingly, often share content created with such tools. For instance, the TelegramBots community, with 37,000 subscribers, describes itself as a place to "share your Telegram bots and discover bots other people have made." While this community is not dedicated to deepfake bots, it provides fertile ground for discussing and promoting such tools.

The broader Reddit ecosystem also plays a role. Discussions about "referralswaps" show how users seek out and trade referral codes, potentially for premium access or other perks tied to these AI tools, and the instruction to "Post your referrals and help out others" points to a peer-to-peer network that eases access to these controversial apps. A community like CharacterAI, with 1.2 million subscribers, focuses on AI character interaction rather than deepfakes, but its sheer size reflects a broader fascination with AI-driven content that can, unfortunately, spill over into problematic applications like clothoff.io. These communities have rules such as "Please do not post any scams or misleading ads (report it if you encounter one)" and ask users not to upvote posts in the interest of fairness, yet given their sheer volume and decentralized nature they often struggle to moderate deepfake-related content effectively.

The Dual Nature of Online Communities

Online communities are powerful tools, capable of fostering connections, sharing knowledge, and even organizing for social good. However, they also possess a dual nature, capable of facilitating the spread of harmful content and fostering environments where unethical activities can thrive. In the context of deepfake apps like clothoff.io, these communities become breeding grounds for the exchange of problematic content and the promotion of tools that violate privacy. Despite community guidelines and moderation efforts, the scale of these platforms makes it incredibly difficult to police every interaction. The presence of referral systems and the encouragement to "help out others" can create a perverse incentive structure, further embedding these apps within certain user groups. This highlights the ongoing challenge for platform providers to balance open communication with the critical need to prevent the proliferation of illegal and unethical content.

The Developers' Perspective (or lack thereof): "Busy Bees" and Competition

From the limited public statements and observations, the developers behind clothoff.io appear to maintain a facade of benign development, despite the highly contentious nature of their product. Phrases like, "We’ve been busy bees 🐝 and can’t wait to share what’s new with clothoff," paint a picture of industrious innovation, typical of any tech startup. This carefully crafted messaging attempts to normalize the app's development, presenting it as a legitimate technological endeavor rather than a tool with significant ethical and legal ramifications. It's a classic public relations strategy to distract from the underlying issues.

Furthermore, the phrase "Ready to flex your competitive side" suggests an attempt to gamify the experience or to position clothoff.io within a competitive landscape of similar AI tools. This implies a market-driven approach, where the goal is to attract and retain users, possibly by offering new features or improved performance. This perspective, however, completely sidesteps the profound ethical and legal concerns associated with deepfake pornography. It suggests a focus on technological advancement and user engagement above all else, with little to no regard for the real-world harm inflicted by their creation. The developers' apparent detachment from the consequences of their app underscores a disturbing trend in certain corners of the tech world, where the pursuit of innovation and profit can overshadow fundamental principles of responsibility and human dignity. Their continued operation, despite the clear ethical boundaries they cross, highlights the urgent need for stronger regulatory frameworks and international cooperation to address the challenges posed by such technologies.

Protecting Yourself: Identifying and Combatting Deepfakes

In an age where AI-generated content is becoming increasingly sophisticated, knowing how to protect yourself and identify deepfakes is paramount. While apps like clothoff.io aim for realism, there are often subtle tells that can betray a deepfake. Look for inconsistencies in lighting, shadows, skin tone, and facial expressions. Blurry edges around the subject, unnatural blinking patterns (or lack thereof), and distorted background elements can also be indicators. Audio deepfakes might have unusual intonation or background noise anomalies. If something feels "off" about an image or video, trust your intuition.

Beyond identification, proactive measures are crucial. Be mindful of what you share online, as any image can potentially be used as source material for deepfakes. Adjust your privacy settings on social media platforms to limit who can access your photos. If you or someone you know becomes a victim of deepfake pornography, it's vital to act quickly. Report the content to the platform where it's hosted, contact law enforcement, and seek legal advice. Organizations dedicated to fighting online harassment and deepfake abuse can also provide support and resources. Remember, the creation and distribution of non-consensual deepfake pornography is a crime, and victims are not to blame.

The Future of Deepfake Technology and Regulation

The battle against malicious deepfakes is an ongoing one, fought on multiple fronts. Researchers are developing advanced detection tools that use AI to identify AI-generated content, creating a technological arms race. Lawmakers worldwide are working to strengthen legislation, aiming to create comprehensive legal frameworks that can effectively deter and punish perpetrators. Furthermore, platform providers are under increasing pressure to implement stricter content moderation policies and invest in AI-powered detection systems. The future will likely see a combination of technological countermeasures, robust legal frameworks, and increased public awareness campaigns to combat the spread of harmful deepfakes. It's a collective responsibility to ensure that AI serves humanity responsibly, rather than being exploited for malicious purposes that undermine trust and violate fundamental rights.

Conclusion

The emergence of clothoff.io is a stark reminder of the dual nature of technological advancement. While AI holds immense promise for positive change, it also carries the potential for profound harm when wielded irresponsibly. This deepfake AI app, designed to "undress anyone using AI," epitomizes the ethical and legal challenges facing our digital society. From its creators' determined efforts to remain anonymous, reportedly operating through entities such as Texture Oasis, to its alarming popularity of millions of monthly visits, clothoff.io reveals a deeply troubling demand for non-consensual intimate imagery.

The app's existence underscores critical issues of privacy, consent, and the devastating human cost of AI misuse. As legal frameworks struggle to keep pace with rapid technological innovation, individuals who engage with such platforms face significant legal risks. The digital communities that facilitate the spread of these tools, despite their own guidelines, further complicate efforts to control the proliferation of harmful content. Ultimately, clothoff.io is not just another app; it's a symbol of the urgent need for greater ethical responsibility in AI development, stronger legal protections for individuals, and enhanced digital literacy for all. It is imperative that we, as a society, continue to advocate for and enforce strict measures against technologies that enable abuse and violate fundamental human rights.

Have you encountered or been affected by deepfake technology? Share your thoughts and experiences in the comments below, or explore other articles on digital safety and AI ethics on our site.
