Clothoff.io: Unpacking The Controversial AI 'Undressing' App

In the rapidly evolving landscape of artificial intelligence, certain applications push the boundaries of technology, ethics, and legality. Among these, Clothoff.io has emerged as a particularly contentious subject, sparking widespread debate and concern. This platform, which openly invites users to "undress anyone using AI," represents a significant ethical dilemma, raising critical questions about consent, privacy, and the potential for digital harm. Its existence highlights the urgent need for a deeper understanding of AI's capabilities and the profound implications of its misuse.

The controversy surrounding Clothoff.io is not merely academic; it touches upon the very fabric of digital safety and personal integrity. With its explicit functionality, the app has drawn the attention of privacy advocates, legal experts, and concerned citizens alike. As we delve into the mechanics, ethics, and potential legal ramifications of this application, it becomes clear that platforms like Clothoff.io are more than just technological novelties; they are stark reminders of the responsibilities that come with advanced AI capabilities and the imperative to protect individuals from digital exploitation.

Table of Contents

- What Exactly is Clothoff.io? Understanding its Core Function

- The Alarming Rise of Deepfake Pornography AI

- Ethical Quagmire: Consent, Privacy, and Digital Harm

- Legal Ramifications and the Fight Against Non-Consensual Content

- Community Engagement and the Spread of AI Tools

- Protecting Yourself and Others in the Digital Age

- The Future of AI-Generated Content and Regulation

- Conclusion: Navigating the Complex Landscape of Clothoff.io

What Exactly is Clothoff.io? Understanding its Core Function

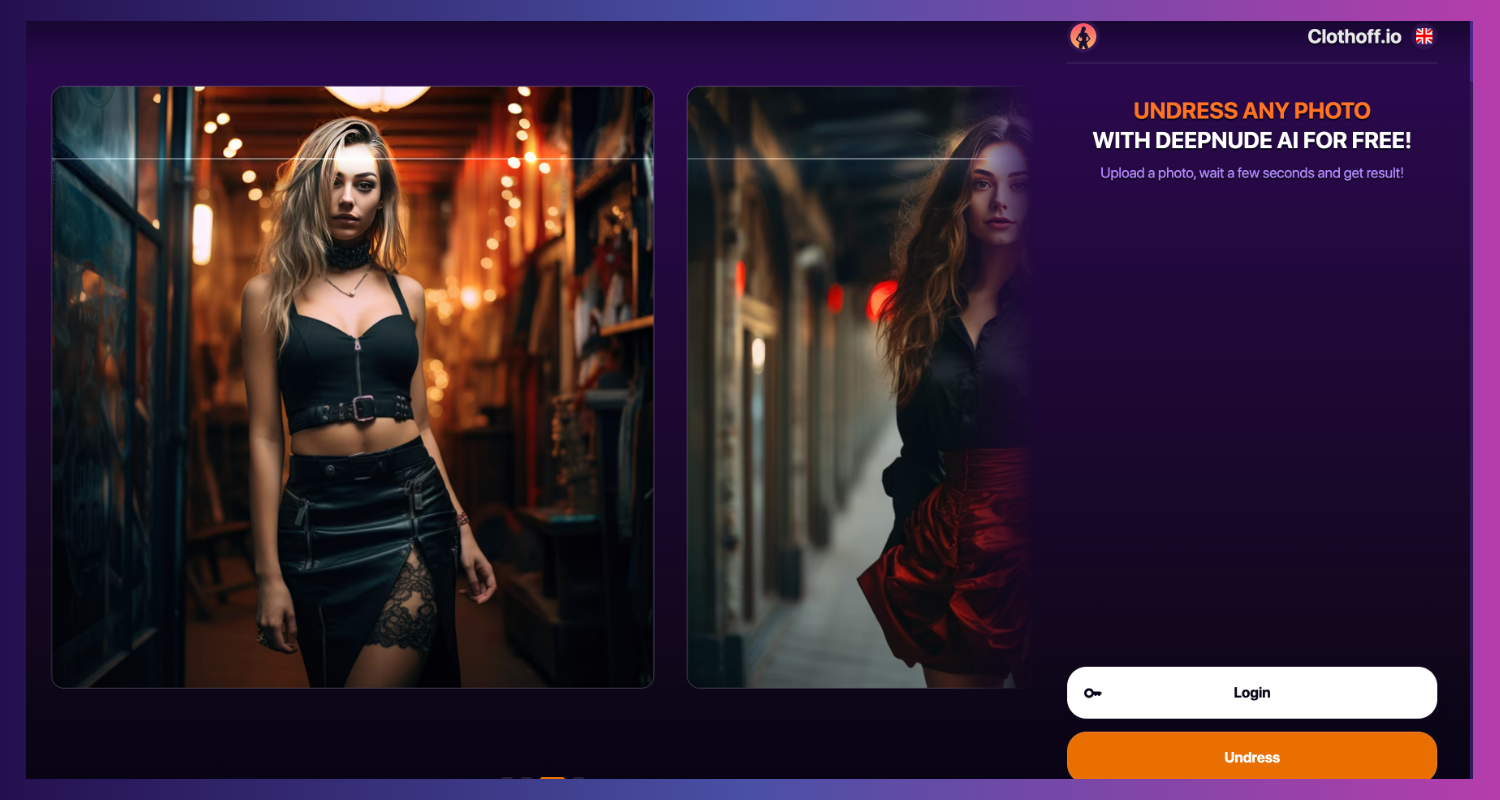

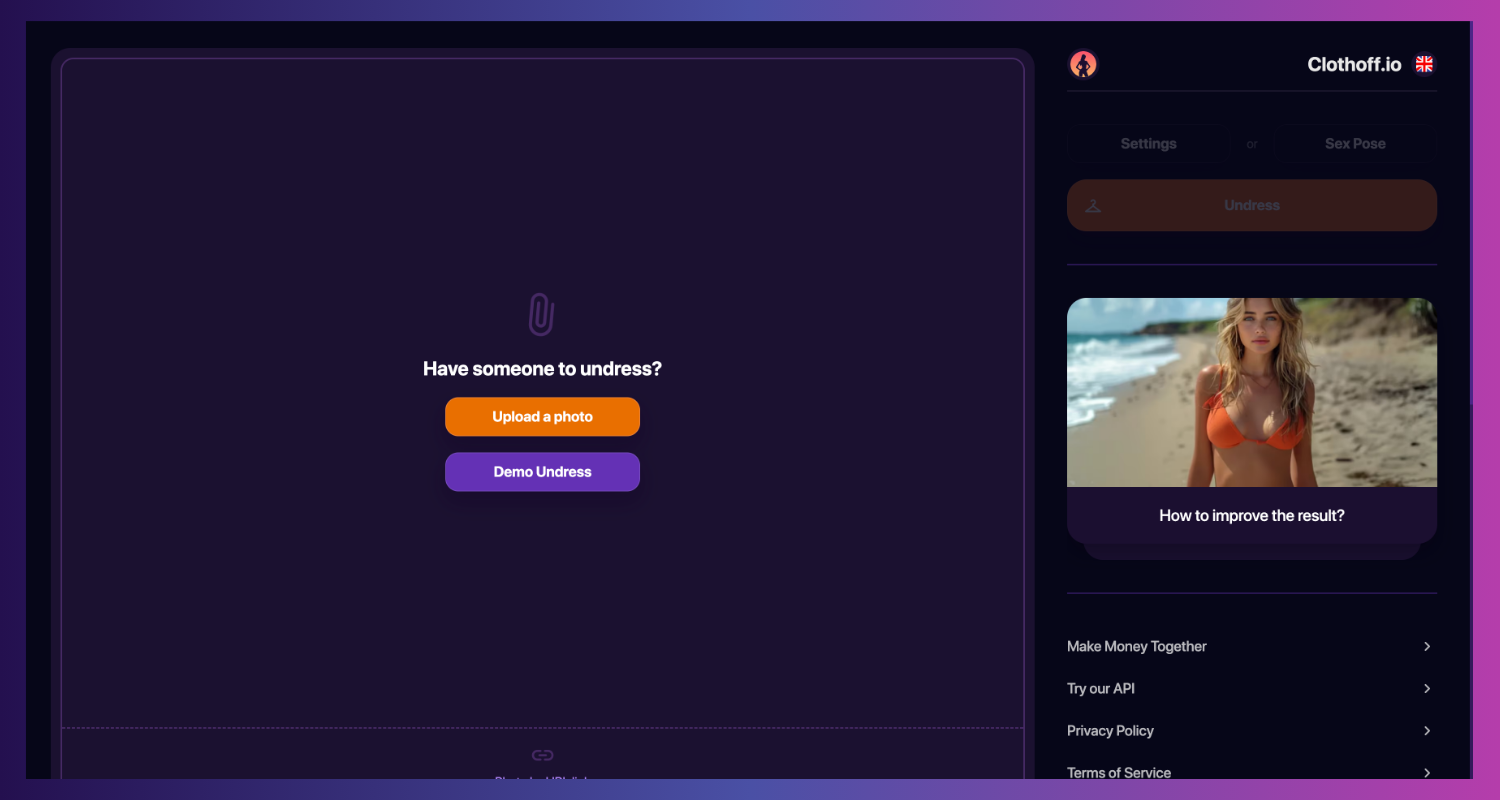

Clothoff.io is an online application that uses artificial intelligence to remove clothing from images, producing what is known as "deepfake pornography." Its website, which reportedly receives more than 4 million visits a month, openly invites users to "undress anyone using AI." The app relies on AI models trained on large image datasets to generate nude or semi-nude images from clothed photographs, and the results can look convincing. Whatever its technical sophistication, the process raises immediate and severe ethical concerns, because its core purpose is to create non-consensual intimate imagery.

The app's premise is straightforward: a user uploads an image of a person, and the AI generates a manipulated version in which the subject appears undressed. This kind of tool, often called a "nude AI" or "deepnude" app, does not reveal anything hidden in the photograph. Instead, it fabricates synthetic skin and body parts and blends them into the original image. The ease of access and the explicit marketing of its "undressing" capability are central to the controversy. Unlike general-purpose AI image generators that may produce explicit content unintentionally, Clothoff.io is designed specifically for this purpose, which makes its intent unambiguous and its potential for harm undeniable.

The Alarming Rise of Deepfake Pornography AI

The emergence of Clothoff.io is part of a broader, more alarming trend: the proliferation of deepfake pornography AI. This technology, which can create highly realistic fake videos or images, has become a significant tool for digital harassment and abuse. The term "deepfake" itself combines "deep learning" (a branch of AI) with "fake," indicating content that is synthetically generated but appears authentic. While deepfake technology has legitimate applications in entertainment and education, its misuse in creating non-consensual intimate imagery (NCII) poses a severe threat to individuals' privacy, reputation, and mental well-being.

Investigations into platforms like Clothoff.io have shed light on the shadowy world behind these applications. For instance, The Guardian's investigation into "the names linked to Clothoff, the deepfake pornography AI app," highlights how clandestine these operations are and how difficult it is to hold their operators accountable. The identities of the people involved are often obscured, which makes it hard to trace ownership or enforce the law. This anonymity allows such apps to thrive, often from jurisdictions where laws against deepfake pornography are non-existent or poorly enforced. Clothoff.io's traffic alone, millions of visits a month, underscores the scale of the problem and the urgent need for a concerted effort to combat it.

Ethical Quagmire: Consent, Privacy, and Digital Harm

At the heart of the Clothoff.io controversy lies a profound ethical quagmire. The app fundamentally violates the principles of consent and privacy, which are cornerstones of human dignity both online and offline. When an image of a person is "undressed" without their explicit permission, the result is a severe invasion of their privacy and autonomy. This is not a digital prank. It is a form of image-based sexual abuse and harassment, because it involves creating, and potentially disseminating, intimate imagery the person never consented to. The psychological impact on victims can be devastating, including severe emotional distress, anxiety, depression, and even suicidal ideation. Their sense of safety and trust can be shattered, and their reputation irrevocably damaged.

The existence of such tools also normalizes the objectification and sexualization of people without their consent. It fosters an environment in which digital bodies can be manipulated and exploited for gratification, blurring the line between reality and fabrication. This has broader societal implications, contributing to a culture in which individuals, particularly women and minors, are increasingly vulnerable to online abuse. Some users who have created nude images of people with an AI art app later ask online whether the developers will report them and whether they will face legal action. Their questions show how little some users understand the gravity of their actions until after the fact. This highlights a critical need for public education on the ethical boundaries of AI and the serious consequences of misusing such technologies.

Legal Ramifications and the Fight Against Non-Consensual Content

The legal landscape surrounding deepfake pornography and applications like Clothoff.io is complex and constantly evolving. Many jurisdictions are enacting or strengthening laws against non-consensual intimate imagery (NCII). However, the global nature of the internet and the anonymity some platforms offer make enforcement challenging. The legal fight involves both prosecuting the people who create and distribute such content and holding accountable the platforms that facilitate it. The stakes are high, because deepfake pornography directly threatens its victims' safety and emotional well-being.

The Developers' Stance and Responsibility

The developers behind applications like Clothoff.io often operate with a degree of impunity, which makes it difficult to establish their true identities or hold them legally responsible. It has been reported that "in the year since the app was launched, the people running Clothoff have carefully" managed their operations, which suggests a deliberate strategy to avoid detection or legal repercussions. Such strategies can include hosting servers in countries with lax laws, using anonymizing services, and setting up complex corporate structures. This contrasts with the practices of more responsible AI developers. As one commenter put it, "of the porn generating AI websites out there right now, from what I know, they will very strictly prevent the AI from generating an image if it likely contains" certain content. This suggests that at least some platforms use content moderation and other safeguards to block harmful or illegal imagery. By explicitly inviting users to "undress anyone using AI," Clothoff.io dispenses with such safeguards. That choice indicates a deliberate disregard for ethical guidelines and potentially for legal boundaries.

User Liability and Potential Consequences

Users of deepfake pornography apps also face significant legal risks. The question "I messed up by using AI art app to 'nudity' people, Will the developers report me and I face legal actions?" is a common concern among people who used such tools without understanding the gravity of their actions. In a growing number of jurisdictions, creating or sharing non-consensual intimate imagery can be a criminal offense, punishable by fines, imprisonment, or both. Revenge porn statutes and broader sexual offense laws can also be applied to cases involving deepfakes. Even if developers do not "report" users, law enforcement agencies can investigate and prosecute individuals in response to complaints from victims or on their own initiative. The digital footprint users leave, even when they try to stay anonymous, can often be traced, with severe personal and legal consequences. This underscores the importance of understanding the legal implications before engaging with such technologies.

Community Engagement and the Spread of AI Tools

The proliferation of AI tools, including those with controversial applications, is often fueled by vibrant online communities. These communities serve as hubs for sharing information, discussing new technologies, and, in some cases, promoting the use of these tools. Understanding how these communities operate provides insight into the spread of applications like Clothoff.io and the challenges in regulating them.

The Role of Referral Programs and Online Forums

Many online services, including those that skirt ethical lines, use referral programs to expand their user base. Phrases like "Post your referrals and help out others" reflect the common practice of rewarding users for recruiting new members. Referral programs are a standard marketing tool, but for controversial apps they can drive rapid growth and wider dissemination of harmful content. Online forums and subreddits such as r/referralswaps often serve as venues for sharing these referrals. Community rules such as "Please do not post any scams or misleading ads (report it if you encounter one)" and "Remember to not upvote any posts to ensure it is fair for..." are attempts by these communities to self-regulate and maintain a semblance of order. However, the presence of such discussions in these spaces highlights the ongoing challenge of managing content that borders on or crosses into illegality.

Broader AI Communities and Their Guidelines

Beyond referral-focused communities, there are much larger ecosystems dedicated to AI development and discussion. Subreddits such as r/telegrambots (about 37k subscribers) and r/characterai (about 1.2 million subscribers) illustrate the scale of interest in AI-driven tools. These communities do not focus on controversial applications, but they discuss a wide range of AI technologies, including image generation. Some of them place a stronger emphasis on ethical AI development and responsible use. However, the sheer number of users and the rapid pace of technological change make it difficult to stop information about less ethical applications from spreading. The challenge is to educate these large communities about the dangers and legal consequences of misusing AI, so that the development and sharing of AI tools stay within ethical and legal boundaries.

Protecting Yourself and Others in the Digital Age

In an era where deepfake technology is increasingly accessible, protecting oneself and others from digital harm is paramount. The first line of defense is awareness. Understanding how applications like Clothoff.io operate and the potential for misuse is crucial. Users should exercise extreme caution when sharing personal images online, especially those that might be easily manipulated. Opting for stronger privacy settings on social media platforms and being mindful of who has access to your photos can mitigate risk.

For potential victims, knowing how to respond is vital. If you discover that you have been targeted with deepfake pornography, take the following steps:

- **Do not blame yourself:** The responsibility lies solely with the perpetrator.

- **Document everything:** Take screenshots, save links, and gather any evidence related to the deepfake.

- **Report the content:** Contact the platform where the deepfake is hosted and request its removal. Many platforms have policies against NCII.

- **Seek legal advice:** Consult with an attorney specializing in digital rights or cybercrime to understand your legal options.

- **Contact law enforcement:** Report the incident to the police.

- **Seek emotional support:** The experience can be traumatizing. Reach out to friends, family, or mental health professionals.

The Future of AI-Generated Content and Regulation

The rapid advancement of AI technology, exemplified by applications like Clothoff.io, presents a significant challenge for future regulation. As AI becomes more sophisticated, the line between real and fake will continue to blur, making it increasingly difficult to detect and combat malicious content. This necessitates a multi-faceted approach to regulation, involving technological solutions, legislative action, and international cooperation.

Technologically, there is a growing need for robust deepfake detection tools that can identify manipulated content with high accuracy. Watermarking or digital signatures for AI-generated content could also help in tracing its origin. Legislatively, governments worldwide are grappling with how to effectively criminalize the creation and dissemination of non-consensual deepfakes, ensuring that laws are broad enough to cover evolving technologies but specific enough to be enforceable. This includes holding platforms accountable for the content they host and providing clear avenues for victims to seek redress.

International cooperation is also vital, as the internet transcends national borders. A global framework for addressing deepfake pornography could help in prosecuting perpetrators who operate across different jurisdictions. Furthermore, ethical guidelines for AI developers and researchers are becoming increasingly important. Promoting responsible AI development that prioritizes safety, privacy, and consent from the outset is crucial to preventing future abuses. The future of AI-generated content hinges on our collective ability to balance innovation with ethical responsibility and robust legal frameworks.

Conclusion: Navigating the Complex Landscape of Clothoff.io

The existence and popularity of Clothoff.io are a stark reminder of the complex ethical and legal challenges posed by rapidly advancing AI technology. This "deepfake pornography AI app," which explicitly invites users to "undress anyone using AI" and draws millions of visits a month, poses a significant threat to individual privacy and consent. As we've explored, the app's purpose is unambiguous, but it operates in a legal gray zone. That gray zone raises serious questions about the responsibilities of developers, the liability of users, and the effectiveness of current legal frameworks.

The insights from investigations, user concerns, and community discussions underscore the urgent need for a more robust response to AI-generated non-consensual intimate imagery. The app's operators have worked "carefully" to avoid scrutiny, and the potential for profound digital harm remains. It is imperative that we, as a society, continue to advocate for stronger laws, promote digital literacy, and foster a culture of ethical AI development and use. Understanding the nuances of platforms like Clothoff.io is the first step towards building a safer, more respectful digital environment for everyone.

What are your thoughts on the ethical implications of AI "undressing" apps? Have you encountered similar concerns in other online communities? Share your perspectives and insights in the comments below. Your input helps us all navigate the evolving landscape of AI responsibly. Feel free to share this article to raise awareness about this critical issue.
