Unmasking Clothoff.io: The Deepfake App's Hidden Depths And Dangers

In an increasingly digital world, the line between reality and fabrication is blurring with alarming speed. At the forefront of this unsettling trend is Clothoff.io, an AI-powered application that has drawn significant attention, and controversy, for its ability to "undress" images. With its website reportedly attracting more than 4 million visits a month, Clothoff.io is a stark example of how advanced AI can be put to uses that raise serious ethical, legal, and privacy concerns. This article examines how Clothoff.io operates, what is known about the people behind it, and the risks it poses to individuals and to the wider digital landscape.

The allure of such an application is undeniable for some, promising the ability to manipulate images with a few clicks. However, the true cost of this digital "magic" extends far beyond mere curiosity. As we peel back the layers of anonymity surrounding Clothoff.io, we uncover a complex web of hidden identities, questionable financial transactions, and a disturbing disregard for consent and privacy. Understanding the mechanics and implications of Clothoff.io is not just about comprehending a piece of technology; it's about recognizing the profound societal challenges posed by the proliferation of deepfake content and the urgent need for digital literacy and ethical awareness.

Table of Contents

- What is Clothoff.io? Understanding the "Undress" Feature

- The Veil of Anonymity: Unmasking the Creators of Clothoff.io

- The Ethical Quagmire: Deepfakes, Consent, and the Clothoff Dilemma

- The Digital Footprint: Where Clothoff Thrives Online

- Navigating the Legal Landscape: Potential Consequences of Using Clothoff.io

- Protecting Yourself in the Deepfake Era

- The Business of Deception: Monetization and Referrals

- The Future of Deepfake Technology and Regulation

- Conclusion: Navigating the Complexities of Clothoff.io and Beyond

What is Clothoff.io? Understanding the "Undress" Feature

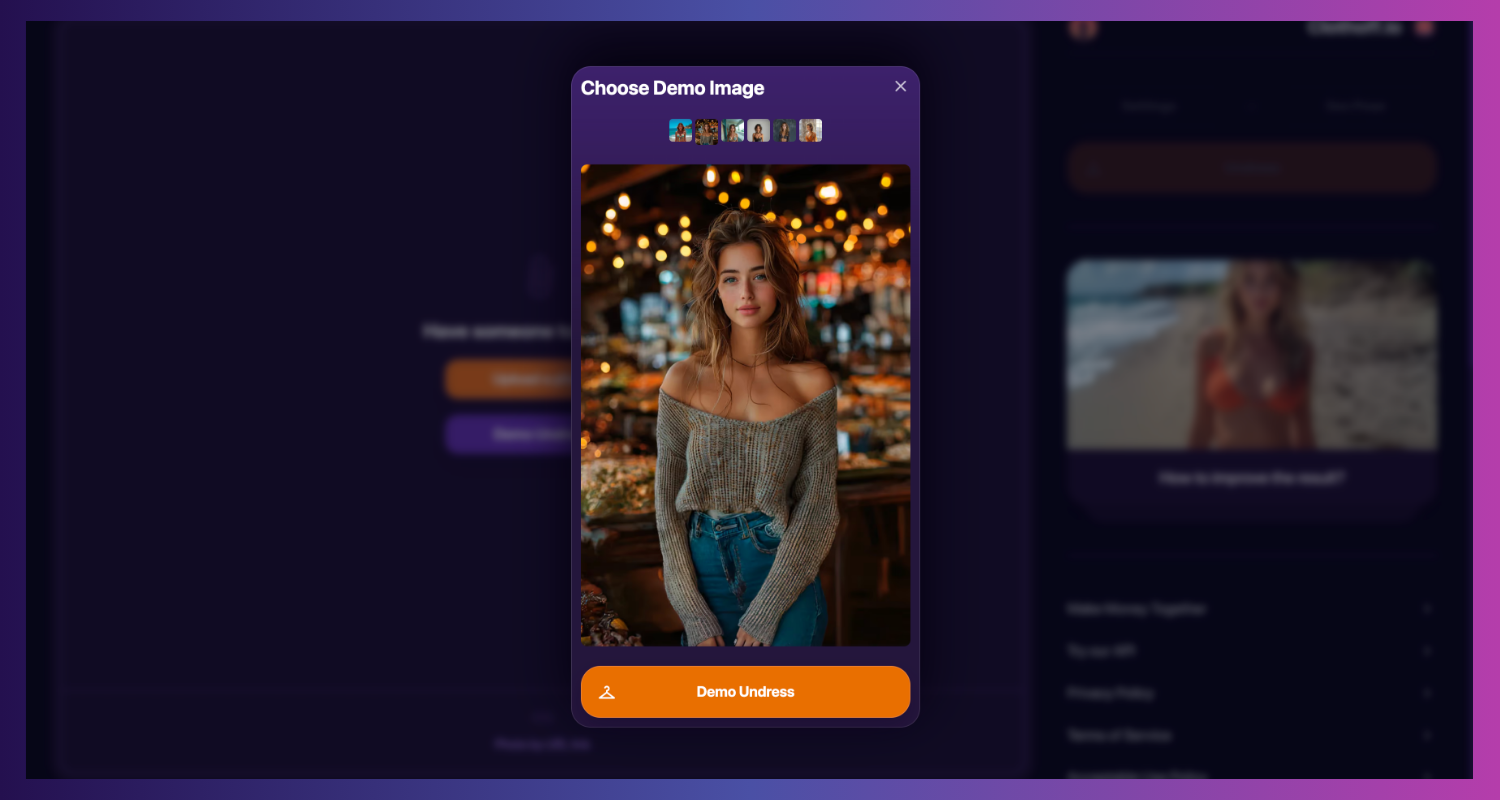

Clothoff.io is widely described as a "deepfake pornography AI app." At its core, the service invites users to "undress" images using artificial intelligence: a user uploads a picture of a person, and the AI model attempts to generate a nude or semi-nude version of that person, digitally removing their clothing. The underlying technology is a form of generative AI commonly called deepfake technology, which has become increasingly sophisticated in recent years. The models themselves are complex, but Clothoff.io's interface is designed to be simple, so that people with no technical expertise can use it. That ease of use, combined with the controversial nature of the output, helps explain the site's reported popularity of more than 4 million monthly visits.

The appeal for some users lies in the ability to create realistic yet entirely fabricated images. That capability carries immense ethical baggage. The label "deepfake pornography" itself points to the core problem: the app produces sexually explicit content depicting people who have not consented to it. Such output is non-consensual intimate imagery (NCII), a severe form of digital abuse. The very premise of Clothoff.io challenges fundamental principles of privacy, consent, and personal dignity, which sets it apart from more benign AI image-editing tools.

The Veil of Anonymity: Unmasking the Creators of Clothoff.io

One of the most striking aspects of Clothoff.io is its creators' concerted effort to remain anonymous. This deliberate obfuscation raises immediate red flags, suggesting they are aware of the controversial and potentially illicit nature of their operation. Investigations into the app's financial trail have provided some clues, revealing the lengths the app's creators have gone to in order to disguise their identities. This appears to be less about protecting privacy than about evading accountability for the content generated and the ethical breaches committed through the platform.

Transactions related to Clothoff.io have reportedly led to a company registered in London called Texture. The discovery matters because it provides a tangible link to the real world, even if that link remains shrouded in corporate anonymity. "Texture" might be a legitimate business entity, but its connection to an app that facilitates non-consensual deepfake pornography warrants intense scrutiny. The names of the people behind Clothoff are deliberately kept out of public view, which makes it very difficult to identify who is profiting from and enabling this technology. Such opacity is typical of operations working in a legal grey area or actively trying to avoid legal repercussions. Payment routes designed to obscure the ultimate beneficiaries show how sophisticated this anonymity strategy is, and they make it harder for law enforcement and privacy advocates to pinpoint those responsible.

The Ethical Quagmire: Deepfakes, Consent, and the Clothoff Dilemma

The ethical implications of deepfake technology, especially when it is used to create non-consensual intimate imagery, are profound and far-reaching, and Clothoff.io sits squarely at the center of them. The fundamental issue is consent. When AI is used to "undress" a person in an image, it does so without that person's knowledge or permission. This is a severe violation of privacy and personal autonomy that turns an individual's likeness into a tool for exploitation. The ease with which such images can be created and shared compounds the harm, exposing victims to reputational damage, psychological distress, and even real-world harassment.

The very existence of apps like Clothoff.io normalizes digitally violating people, particularly women, who are disproportionately targeted by such deepfakes. These apps blur the line between what is real and what is fabricated, eroding trust in digital media and creating fertile ground for misinformation and abuse. Convincing fakes make it harder for victims to prove that an image is not genuine, and harder for authorities to trace where malicious content originated. The technology is therefore not just a technical feat; it is a social weapon that can inflict serious harm on individuals and on society's collective sense of truth and safety.

The Human Cost of AI "Nudity"

The impact of apps like Clothoff.io on individuals can be devastating. One user's candid admission, "I messed up by using ai art app to “nudity” people," shows how regret can follow once a person realizes which ethical boundaries they have crossed. The same user asked, "Will the developers report me and i face legal actions?", a question that reflects a sudden shift from casual use of a technology to fear of criminal prosecution. That fear is well founded: creating and distributing non-consensual intimate imagery is illegal in many jurisdictions worldwide.

Victims of deepfake pornography often suffer severe emotional trauma, including anxiety, depression, and a lasting sense of violation. Their digital identity is compromised and their personal boundaries are overridden without their consent. The psychological toll can be immense, leaving long-term distress and a feeling of powerlessness. For those who use such apps, the consequences can range from social ostracism to serious legal penalties, including fines and imprisonment. The ease of creating these images belies the gravity of the offense, and the fact that the act is digital does not make its harm any less real.

The Digital Footprint: Where Clothoff Thrives Online

Despite its controversial nature and its creators' anonymity, Clothoff.io has established a significant presence across various online communities. This footprint suggests a concerted effort to reach users where they already gather, using existing platforms to promote the app and its capabilities. Visibility in these spaces feeds the app's high monthly traffic and growing notoriety, and makes it a persistent problem for those advocating digital safety and ethical AI use.

Telegram Bots and Community Engagement

One place where Clothoff.io appears to have a presence is the Telegram bot community. The telegrambots community, with some 37k subscribers, serves as a hub for sharing and discovering new bots, and its invitation to "share your telegram bots and discover bots other people have made" creates an environment where tools like Clothoff.io can easily be promoted and accessed. Telegram bots are neutral tools in themselves, but their use to provide access to deepfake applications raises concerns about platform responsibility and content moderation. The ease of sharing and the large user base make Telegram an attractive channel for spreading information about such apps, potentially bypassing the restrictions of traditional app stores.

CharacterAI and the Referral Ecosystem

Another online community where Clothoff.io's presence is felt is the CharacterAI community, which has 1.2 million subscribers. A community focused on interacting with AI characters might seem unrelated at first glance, but its call to "Post your referrals and help out others" points to an active referral ecosystem. There is no stated partnership between CharacterAI and Clothoff.io; rather, the general culture of swapping referral links for many online services can expose users to controversial applications. The instruction "Remember to not upvote any posts to ensure it," which likely aims to prevent artificial boosting or scams, shows a community aware of the potential for misuse that nonetheless takes part in a system capable of spreading links to apps like Clothoff.io. Clothoff.io's presence in these large digital ecosystems highlights how hard it is to contain the spread of problematic AI tools.

Navigating the Legal Landscape: Potential Consequences of Using Clothoff.io

Using deepfake technology, particularly to create non-consensual intimate imagery, carries significant legal risks for both the creators and the users of applications like Clothoff.io. These risks can profoundly affect a person's life, finances, and freedom, so they are worth understanding clearly. The question "Will the developers report me and i face legal actions?" is not merely rhetorical; it reflects a genuine and serious concern.

In many countries, laws are evolving quickly to address deepfake technology. Creating or sharing deepfake pornography without consent can be treated as sexual exploitation, harassment, or defamation. Penalties vary by jurisdiction but can include substantial fines, imprisonment, and mandatory registration as a sex offender. In the United States, for instance, several states have enacted laws specifically targeting non-consensual deepfake pornography, making its creation and distribution illegal. The UK, Australia, and various European Union countries are likewise strengthening their legal frameworks against this form of digital abuse.

Even if Clothoff.io's developers never report users themselves, law enforcement agencies can open investigations based on victim reports or proactive monitoring. Digital trails, including IP addresses, payment records, and shared content, can often be traced back to the user. Victims can also bring civil lawsuits for damages, exposing those who create or distribute such content to significant financial liability. The anonymity the app's creators seek suggests they are aware of these legal dangers and are trying to insulate themselves from prosecution, while their users may be exposing themselves to severe consequences.

Protecting Yourself in the Deepfake Era

As deepfake technology becomes more sophisticated and more accessible through platforms like Clothoff.io, protecting yourself and others is paramount. That takes a combination of digital literacy, critical thinking, and proactive measures.

First, cultivate a healthy skepticism toward online content. Question the authenticity of images and videos, especially those that seem shocking or sensational, and cross-check information against several reliable sources before accepting it as true. Second, be mindful of your digital footprint. Any image or video you share online could be misused by malicious actors, so adjust the privacy settings on your social media accounts, limit the personal information you share, and be careful about who can access your photos.

For anyone who may have used an app like Clothoff.io, understanding the legal and ethical repercussions is the first step. If you have created or shared non-consensual deepfakes, seek legal counsel promptly to understand your rights and potential liabilities. If you are a victim of deepfake pornography, report the content to the platform hosting it, contact law enforcement, and seek support from victim advocacy groups; there are organizations dedicated to helping people get such content removed and cope with its psychological impact. Educating yourself and others about the dangers of deepfakes and promoting responsible AI use are vital steps toward a safer digital environment.

The Business of Deception: Monetization and Referrals

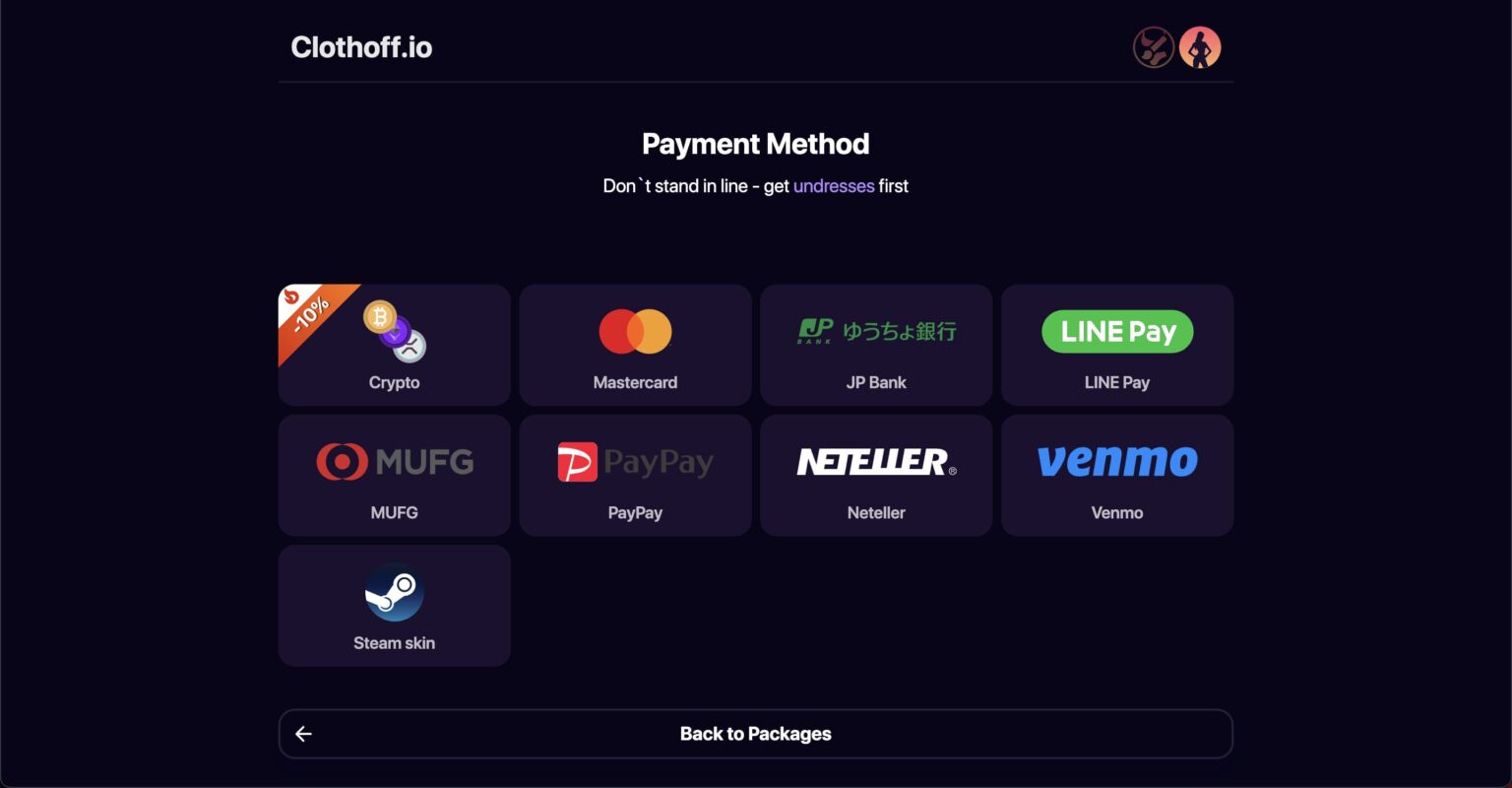

Whatever its controversial nature, Clothoff.io is a business. The reporting that "Payments to clothoff revealed the lengths the app’s creators have taken to disguise their identities" makes clear that a financial model is at play. The exact pricing structure is not detailed, but users are evidently paying for the service, and those payments are routed through channels designed to obscure the ultimate recipients. The app, in other words, is not a technological experiment but a commercial enterprise capitalizing on demand for deepfake content. The use of a London-registered company, "Texture," as a recipient of these transactions suggests an attempt to legitimize, or at least formalize, the flow of money while the true beneficial owners stay hidden.

The monetization strategy likely involves a subscription or pay-per-use model that encourages repeat use. Disguising identities through these payment channels looks like a deliberate effort to avoid legal and financial scrutiny, given the nature of the service. Such financial opacity is common in operations engaged in illegal or unethical activity, because it complicates tracking and accountability.

The Referral Economy and Its Risks

Beyond direct payments, the app also appears to rely on referrals to grow its user base. The phrase "Post your referrals and help out others" points to communities where users are encouraged to bring in new customers. Referral programs are a common growth strategy for online services, but in the context of Clothoff.io they take on a more sinister tone: users are effectively rewarded for spreading an application that facilitates non-consensual intimate imagery. The advice "Please do not post any scams or misleading ads (report it if you encounter one)" shows that these referral communities are alert to some malicious practices. Yet people chasing referral rewards often overlook that promoting an app like Clothoff.io is itself harmful, given its potential for abuse and illegality. The instruction "Remember to not upvote any posts to ensure it" (likely meant to prevent artificial boosting or to share referral opportunities fairly) shows a community trying to regulate itself, but the core activity remains problematic. The referral system turns users into marketers for a harmful service, extending its reach and impact across digital platforms.

The Future of Deepfake Technology and Regulation

The emergence and proliferation of applications like Clothoff.io underscore a critical challenge for technology and society: how to regulate rapidly advancing AI capabilities that can cause significant harm. As AI models become more sophisticated, generating hyper-realistic fake content will only get easier, and distinguishing truth from fabrication will get harder. That calls for a proactive and adaptive approach to regulation.

Governments worldwide are grappling with the issue and exploring a range of legal and policy frameworks. These include laws specifically criminalizing the creation and distribution of non-consensual deepfake pornography, requirements for platforms to implement robust content moderation, and mandates for transparency about AI-generated content. However, the global nature of the internet and the anonymity favored by operators like those behind Clothoff.io make enforcement extremely difficult. International cooperation and harmonized legal standards will be essential to combat the misuse of deepfake technology effectively.

Beyond legal measures, technological solutions such as digital watermarking and provenance tracking are being developed to help identify AI-generated content. Ethical guidelines for AI developers and a greater emphasis on digital literacy and media education are also crucial. The future will likely bring a continuing cat-and-mouse game between those who exploit AI for malicious purposes and those who work to protect individuals and preserve trust in the digital realm. Clothoff.io is a stark reminder of how urgent that effort is.

Conclusion: Navigating the Complexities of Clothoff.io and Beyond

A close look at Clothoff.io reveals more than an AI application; it exposes an ecosystem where technological innovation meets profound ethical dilemmas and legal risk. From its reported 4 million monthly visits to the London-registered company "Texture," Clothoff.io's operation is designed to be both widely accessible and deeply anonymous. The app's core function, the non-consensual "undressing" of images, highlights a critical societal challenge: the weaponization of AI for digital abuse. Users' own concerns about legal action and the ethics of creating AI "nudity" underscore the serious personal and legal consequences of such tools. The app's presence in communities such as Telegram bot groups and CharacterAI, helped along by referral systems, shows how far its reach extends and how hard it is to control. The legal landscape is evolving, but countering deepfake misuse requires vigilance, robust regulation, and a collective commitment to digital ethics.

We encourage you to share your thoughts on the ethical implications of deepfake technology and applications like Clothoff.io in the comments below. What measures do you believe are most effective in combating the misuse of AI? Your insights contribute to a vital conversation about the future of our digital world. For more in-depth analysis of emerging technologies and their societal impact, explore other articles on our site.
